Article

Google Exposes AI-Powered Malware

Nov 17, 2025

Arete Analysis

Cybersecurity Trends

Earlier this month, Google released a report claiming to have observed the first AI-augmented malware being used in real-world attacks. The discovery marks a significant advance in cybercriminals’ use of generative AI. While security researchers have been aware of the adversarial use of AI for some time, this would be the first known instance of malware directly incorporating AI into its functionality during execution.

What’s Notable and Unique

Although AI-enabled malware is still in its infancy, one example, PROMPTSTEAL, was deployed by Russia in an operational environment against Ukraine, indicating both the utility of AI capabilities and a desire to leverage them immediately.

The development of AI-enabled malware is not unique to nation-state actors. Some of the malware reported by Google was developed with clear financial motivations in mind, and several AI-augmented tools are being advertised on cybercriminal forums.

Analyst Comments

Polymorphic malware, which changes its own code to evade detection, is not a novel concept; the first examples date back to the early 1990s. Those examples, however, typically achieved their objectives by modifying obfuscation or encryption routines rather than by introducing new code and functionality. Threat actors’ use of AI has grown markedly more sophisticated throughout 2025, evolving from simple script generation to incorporation into every phase of the attack lifecycle. At the present pace, AI-enabled malware is unlikely to remain in this fledgling state for long.

Sources


Article

Feb 20, 2026

Threat Actors Leveraging Gemini AI for All Attack Stages

State-backed threat actors are leveraging Google’s Gemini AI as a force multiplier to support all stages of the cyberattack lifecycle, from reconnaissance to post-compromise operations. According to the Google Threat Intelligence Group (GTIG), threat actors linked to the People’s Republic of China (PRC), Iran, and North Korea, along with other unattributed groups, have misused Gemini to accelerate target profiling, synthesize open-source intelligence, identify official email addresses, map organizational structures, generate tailored phishing lures, translate content, conduct vulnerability testing, support coding tasks, and troubleshoot malware development. Cybercriminals are also increasingly exploring AI-enabled tools and services to scale malicious activities, including social engineering campaigns such as ClickFix, demonstrating how generative AI is being integrated into both espionage and financially motivated threat operations.

What’s Notable and Unique

Threat actors are leveraging Gemini beyond basic reconnaissance, using it to generate polished, culturally nuanced phishing lures and sustain convincing multi-turn social engineering conversations that minimize traditional red flags.

In addition, threat actors rely on Gemini for vulnerability research, malware debugging, code generation, command-and-control development, and technical troubleshooting, with PRC groups emphasizing automation and vulnerability analysis, Iranian actors focusing on social engineering and malware development, and North Korean actors prioritizing high-fidelity target profiling.

Beyond direct operational support, adversaries have abused public generative AI platforms to host deceptive ClickFix instructions, tricking users into pasting malicious commands that deliver macOS variants of ATOMIC Stealer.

AI is also being integrated directly into malware development workflows, as seen with CoinBait’s AI-assisted phishing kit capabilities and HonestCue’s use of the Gemini API to dynamically generate and execute in-memory C# payloads.

Underground forums show strong demand for AI-powered offensive tools, with offerings like Xanthorox falsely marketed as custom AI but actually built on third-party commercial models, including Gemini, integrated through open-source frameworks such as Crush, Hexstrike AI, LibreChat-AI, and Open WebUI.

Analyst Comments

The increasing misuse of generative AI platforms like Gemini highlights a rapidly evolving threat landscape in which state-backed and financially motivated actors leverage AI as a force multiplier for reconnaissance, phishing, malware development, and post-compromise operations. At the same time, large-scale model extraction attempts and API abuse demonstrate emerging risks to AI service integrity, intellectual property, and the broader AI-as-a-Service ecosystem. While these developments underscore the scalability and sophistication of AI-enabled threats, continued enforcement actions, strengthened safeguards, and proactive security testing by providers reflect ongoing efforts to mitigate abuse and adapt defenses in response to increasingly AI-driven adversaries.

Sources

GTIG AI Threat Tracker: Distillation, Experimentation, and (Continued) Integration of AI for Adversarial Use


Article

Feb 12, 2026

2025 VMware ESXi Vulnerability Exploited by Ransomware Groups

Ransomware groups are actively exploiting CVE‑2025‑22225, a VMware ESXi arbitrary write vulnerability that allows attackers to escape the VMX sandbox and gain kernel‑level access to the hypervisor. Although VMware (Broadcom) patched this flaw in March 2025, threat actors had already exploited it in the wild, and CISA recently confirmed that threat actors are exploiting CVE‑2025‑22225 in active campaigns.

What’s Notable and Unique

Chinese‑speaking threat actors abused this vulnerability at least a year before disclosure, via a compromised SonicWall VPN chain.

Threat researchers have observed sophisticated exploit toolkits, possibly developed well before public disclosure, that chain this bug with others to achieve full VM escape. Evidence points to targeted activity, including exploitation via compromised VPN appliances and automated orchestrators.

Attackers with VMX-level privileges can trigger a kernel write, break out of the sandbox, and compromise the ESXi host. Intrusions observed in December 2025 showed lateral movement, domain admin abuse, firewall rule manipulation, and staging of data for exfiltration.

CISA has now added CVE-2025-22225 to its Known Exploited Vulnerabilities (KEV) catalog, underscoring ongoing use by ransomware attackers.

Analyst Comments

Compromise of ESXi hypervisors significantly amplifies operational impact, allowing access to and potential encryption of dozens of VMs simultaneously. Organizations running ESXi 7.x and 8.x remain at high risk if patches and mitigations have not been applied. Clients should apply the VMware patches from VMSA‑2025‑0004 across all ESXi, Workstation, and Fusion deployments. Enterprises should also review their environments for publicly accessible management interfaces, as protecting them is a fundamental security best practice.

Sources

CVE-2025-22225 in VMware ESXi now used in active ransomware attacks

The Great VM Escape: ESXi Exploitation in the Wild

VMSA-2025-0004: VMware ESXi, Workstation, and Fusion updates address multiple vulnerabilities (CVE-2025-22224, CVE-2025-22225, CVE-2025-22226)


Article

Feb 5, 2026

Ransomware Trends & Data Insights: January 2026

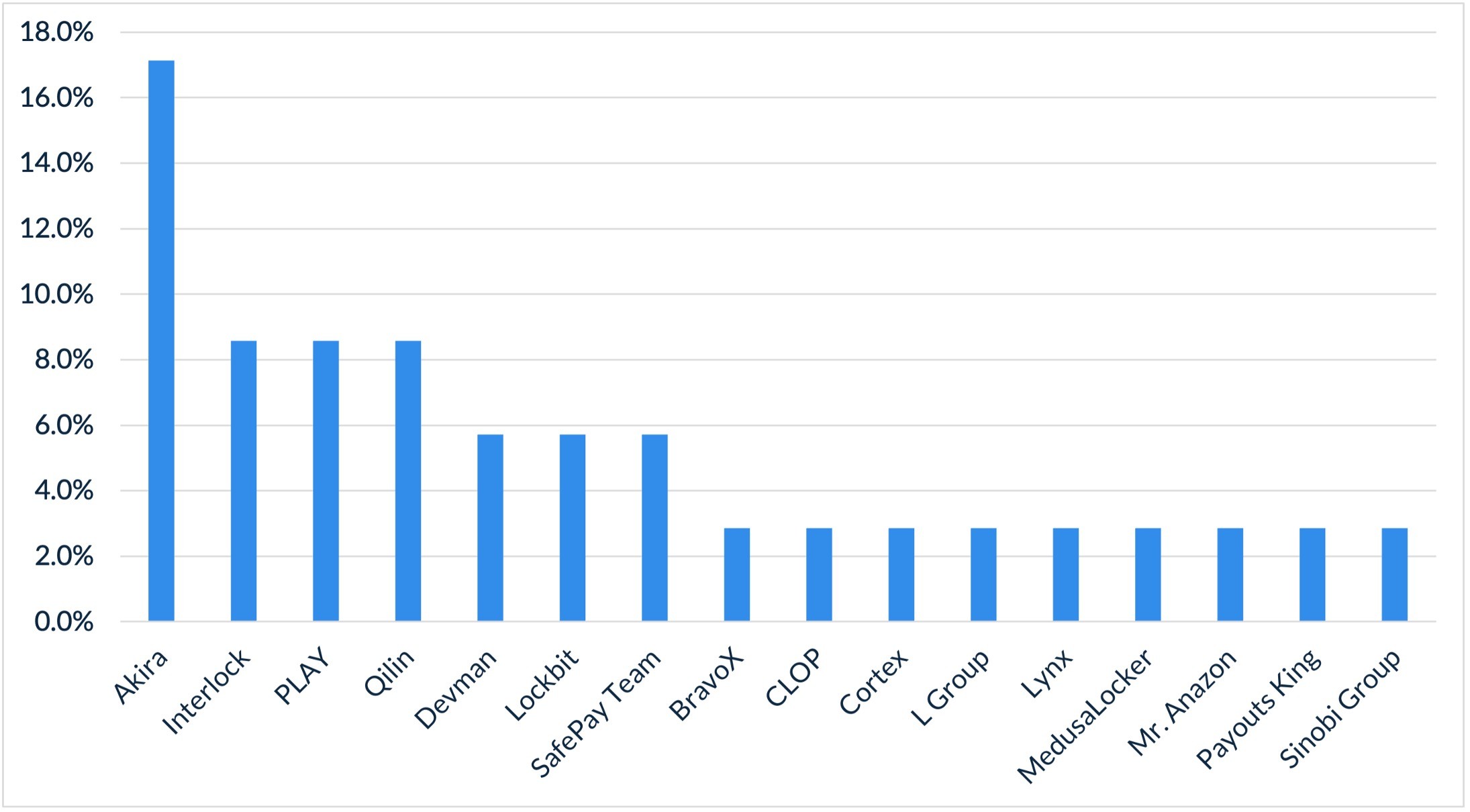

Although Akira was once again the most active ransomware group in January, the threat landscape was more evenly distributed than it was throughout most of 2025. In December 2025, the three most active threat groups accounted for 57% of all ransomware and extortion activity; in January, the top three accounted for just 34%. Akira’s dominance also decreased to levels more consistent with early 2025, as the group was responsible for almost a third of all attacks in December but just 17% in January.

The number of unique ransomware and extortion groups observed rose slightly, from 14 in December to 17 in January. It is too early to assess whether this broader distribution will be the new normal for 2026. It is also worth noting that overall activity in January was lower than in previous months, consistent with what Arete typically observes at the beginning of a new year.

Figure 1. Activity from all threat groups in January 2026

Throughout January, Arete analysts identified several distinct trends in threat actor activity:

In January, Arete observed the reemergence of the LockBit Ransomware-as-a-Service (RaaS) group, which deployed an updated “LockBit 5.0” variant of its ransomware. LockBit first announced the 5.0 version on the RAMP dark web forum in early September 2025, coinciding with the group’s six-year anniversary. The latest LockBit 5.0 variant has both Windows and Linux versions, with notable improvements, including anti-analysis features and unique 16-character extensions added to each encrypted file. However, it remains to be seen whether LockBit will return to consistent activity levels in 2026.

The ClickFix social engineering technique, which leverages fake error dialog boxes to deceive users into manually executing malicious PowerShell commands, continued to evolve in January. One campaign reported during the month used fake Blue Screen of Death (BSOD) messages to manipulate users into pasting attacker-controlled code. Researchers also documented a separate campaign, dubbed “CrashFix,” that uses a malicious Chrome browser extension as its attack vector. The extension crashes the web browser, displays a message stating the browser had “stopped abnormally,” and then prompts the victim to click a button that executes malicious commands.

Also in January, Fortinet confirmed that a new critical authentication vulnerability affecting its FortiGate devices is being actively exploited. The vulnerability, tracked as CVE-2026-24858, allows attackers with a FortiCloud account to log in to devices registered to other account owners due to an authentication bypass flaw in devices using FortiCloud single sign-on (SSO). This recent activity follows the exploitation of two other Fortinet SSO authentication flaws, CVE-2025-59718 and CVE-2025-59719, which were disclosed in December 2025.
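As a rough illustration of the LockBit 5.0 file-naming behavior described above, the following Python sketch flags file names whose final extension is exactly 16 characters long. The helper names and the assumption that the extension consists only of ASCII letters and digits are hypothetical and not taken from Arete’s reporting; benign files can also match, so this is a triage aid under stated assumptions rather than a detection rule.

```python
import re
from pathlib import PurePath

# Assumption (not from the report): the 16 characters are ASCII letters or digits.
EXTENSION_PATTERN = re.compile(r"\.[A-Za-z0-9]{16}")


def has_16_char_extension(filename: str) -> bool:
    """Return True if the final extension is exactly 16 alphanumeric characters."""
    suffix = PurePath(filename).suffix  # e.g. ".docx", or "" when there is none
    return EXTENSION_PATTERN.fullmatch(suffix) is not None


def count_suspect_files(filenames) -> int:
    """Count file names whose final extension matches the 16-character pattern."""
    return sum(1 for name in filenames if has_16_char_extension(name))
```

A sudden spike in the count across a file share, rather than any single match, is the kind of signal that might justify a closer look.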

Source

Arete Internal


Article

Feb 2, 2026

New FortiCloud SSO Vulnerability Exploited

Fortinet recently confirmed that its FortiGate devices are affected by a new critical authentication vulnerability that is being actively exploited. The vulnerability, tracked as CVE-2026-24858, allows attackers with a FortiCloud account to log in to devices registered to other account owners due to an authentication bypass flaw in devices using FortiCloud single sign-on (SSO). CISA added the vulnerability to its Known Exploited Vulnerabilities catalog and gave federal agencies just three days to patch, which requires users to upgrade all devices running FortiOS, FortiManager, FortiAnalyzer, FortiProxy, and FortiWeb to fixed versions. This recent activity follows the exploitation of two other SSO authentication flaws, CVE-2025-59718 and CVE-2025-59719, which were disclosed in December 2025.

What’s Notable and Unique

There are strong indications that much of the recent exploitation activity was automated, with attackers moving from initial access to account creation within seconds.

As observed in December 2025, the attackers’ primary target appears to be firewall configuration files, which contain a trove of information that can be leveraged in future operations.

The threat actors in this campaign favor innocuous, IT-themed email and account names, with malicious login activity originating from cloud-init@mail[.]io and cloud-noc@mail[.]io, while account names such as ‘secadmin’, ‘itadmin’, ‘audit’, and others are created for persistence and subsequent activity.

Analyst Comments

This is an active campaign, and the investigation into these attacks is ongoing. Organizations relying on FortiGate devices should remain extremely vigilant, even after following patching guidance. With threat actors circumventing authentication, it’s crucial to monitor for and alert on anomalous behavior within your environment, such as the unauthorized creation of admin accounts, the creation or modification of access policies, logins outside normal working hours, and anything that deviates from your security baseline.
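The monitoring advice above can be sketched in Python as a simple check of administrative events against the indicators named in this post. The `AdminEvent` record, its field names, the `flag_event` helper, and the 08:00 to 18:00 working-hours window are all hypothetical assumptions for illustration; real FortiGate logs use a different format and would need to be parsed into a comparable shape first. The reported addresses are stored in their defanged form and converted only for comparison.

```python
from dataclasses import dataclass
from datetime import datetime

# Indicators quoted in this post, kept in defanged form.
DEFANGED_LOGIN_SOURCES = {"cloud-init@mail[.]io", "cloud-noc@mail[.]io"}
SUSPICIOUS_ACCOUNT_NAMES = {"secadmin", "itadmin", "audit"}

# Assumption: normal working hours are 08:00-18:00 in the log's time zone.
WORK_START_HOUR, WORK_END_HOUR = 8, 18


def refang(indicator: str) -> str:
    """Convert a defanged indicator (e.g. 'mail[.]io') into its literal form."""
    return indicator.replace("[.]", ".")


LOGIN_SOURCES = {refang(source) for source in DEFANGED_LOGIN_SOURCES}


@dataclass
class AdminEvent:
    """Hypothetical, simplified record; not the actual FortiGate log schema."""
    timestamp: datetime
    action: str       # "login" or "create_admin" in this sketch
    user: str         # SSO identity performing the action
    target: str = ""  # name of the account created, if any


def flag_event(event: AdminEvent) -> list[str]:
    """Return the reasons, if any, that an event deserves analyst review."""
    reasons = []
    if event.action == "login" and event.user.lower() in LOGIN_SOURCES:
        reasons.append("login from reported malicious SSO account")
    if event.action == "create_admin" and event.target.lower() in SUSPICIOUS_ACCOUNT_NAMES:
        reasons.append("new admin account matches reported persistence names")
    if event.action == "login" and not (WORK_START_HOUR <= event.timestamp.hour < WORK_END_HOUR):
        reasons.append("login outside assumed working hours")
    return reasons
```

Because the post notes that other account names were also created, a fixed name list like this one will miss variants; alerting on any unexpected admin account creation is the more robust baseline.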

Sources

Administrative FortiCloud SSO authentication bypass

Multiple Fortinet Products’ FortiCloud SSO Login Authentication Bypass

Arctic Wolf Observes Malicious Configuration Changes On Fortinet FortiGate Devices via SSO Accounts

Arctic Wolf Observes Malicious SSO Logins on FortiGate Devices Following Disclosure of CVE-2025-59718 and CVE-2025-59719
